Did you know that there’s a loaded dice inside ChatGPT? 🎲

✨ Part 2/5 of the Mini-Series: The Creative Shell – What Makes LLMs “Creative”?

See the previous part here.

Every time an LLM generates text, it’s rolling a dice 🎲.

But here’s the thing: the dice is loaded! 😱

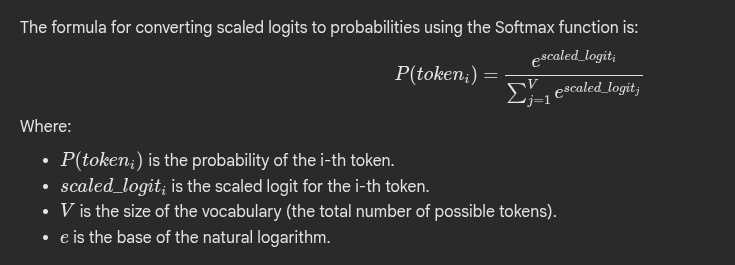

In Parts 0 & 1, we explored how temperature scales logits to control creativity. Today we’re going one level deeper: how those scaled logits become the probabilities the model actually samples from.

When the model computes logits for “The sky is …”, you might get:

- “the” → −93.7
- “blue” → −94.3
- “falling” → −94.5
- “a” → −94.8

These are just raw scores. You can’t sample from them yet.

This is where softmax comes into play (see image 👇). It does two elegant things:

1️⃣ Raises e to the power of each scaled logit (e^logit) → turns every score, even a negative one, into a positive number

2️⃣ Divides each of those numbers by their total → forces everything to add up to 100%
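Written as a single formula (the symbols are notation chosen here for illustration: $z_i$ is the raw logit of word $i$, $T$ is the temperature, and the sum runs over every word in the model's vocabulary):

$$
p_i = \frac{e^{z_i / T}}{\sum_{j} e^{z_j / T}}
$$

The numerator is step 1️⃣ and dividing by the sum is step 2️⃣. For example, at $T = 0.5$ the logit of “the” is first scaled to $-93.7 / 0.5 = -187.4$ before it is exponentiated.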

Now we have a probability distribution—the model’s loaded dice! 🎲
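The two softmax steps can be sketched in a few lines of Python. This is a minimal illustration, not any library's API: the function name `softmax` and its `temperature` parameter are chosen here. It also subtracts the largest scaled logit before exponentiating, a standard trick that leaves the result mathematically unchanged but keeps `math.exp` from underflowing or overflowing on large-magnitude logits.

```python
import math


def softmax(logits, temperature=1.0):
    """Turn raw logits into probabilities (illustrative sketch)."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    # Scale each logit by the temperature.
    scaled = [z / temperature for z in logits]
    # Shift by the max for numerical safety; the final ratios are unchanged.
    m = max(scaled)
    # Step 1: exponentiate, so every value becomes positive.
    exps = [math.exp(s - m) for s in scaled]
    # Step 2: divide by the total, so the values add up to 1 (100%).
    total = sum(exps)
    return [e / total for e in exps]


words = ["the", "blue", "falling", "a"]
logits = [-93.7, -94.3, -94.5, -94.8]

probs = softmax(logits, temperature=0.5)
for word, p in zip(words, probs):
    print(f"{word!r}: {p:.2%}")
```

Because this sketch normalizes over only these four words, “the” gets about 62% here. A real model divides by a sum over its entire vocabulary, so its percentages for these four words come out lower.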

Looking at the image below, notice the effect of temperature:

🔹 LOW TEMPERATURE (0.5) ➡️ Peaked Distribution:

“the”: 57.30% ← Clear winner

“blue”: 18.20%

“falling”: 13.09%

“a”: 6.36%

Result? More than a 50% chance of picking “the”, which makes the model predictable.

🔹 HIGH TEMPERATURE (2.0) ➡️ Flat Distribution:

“the”: 1.21%

“blue”: 0.90%

“falling”: 0.83%

“a”: 0.70%

Result? No dominant choice; many words compete. This makes the model unpredictable (sometimes chaotic).
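A quick way to check the two distributions against each other: when you divide two softmax probabilities, the shared denominator cancels, so the ratio between two words depends only on their logit gap divided by the temperature (notation chosen here: $p$ is a word's probability, $z$ its raw logit, $T$ the temperature). Taking “the” and “a”, whose logits differ by $-93.7 - (-94.8) = 1.1$:

$$
\frac{p_{\text{the}}}{p_{\text{a}}} = e^{(z_{\text{the}} - z_{\text{a}})/T}
\qquad
T = 0.5:\ e^{1.1/0.5} = e^{2.2} \approx 9.0
\qquad
T = 2.0:\ e^{1.1/2.0} = e^{0.55} \approx 1.73
$$

Both agree with the percentages above ($57.30 / 6.36 \approx 9.0$ and $1.21 / 0.70 \approx 1.73$). The four listed words hold about 95% of the probability at $T = 0.5$ but under 4% at $T = 2.0$; the rest is spread across the other words in the vocabulary, which is what a flat distribution means in practice.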

🧠 The mind-bending part: when sampling, the model never “chooses” a word deterministically. It:

1️⃣ Creates a dice 🎲 (probability distribution) using the softmax function

2️⃣ Randomly samples from it
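A toy version of those two steps in Python, assuming only the four words above are possible (a real model rolls over its whole vocabulary). `roll_the_dice` is a hypothetical helper name; `random.choices` is Python's standard-library weighted sampler.

```python
import math
import random
from collections import Counter


def softmax(logits, temperature=1.0):
    # Scale, exponentiate (max-shifted for numerical safety), then normalize to sum to 1.
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def roll_the_dice(words, logits, temperature, rng):
    # Step 1: build the loaded dice (a probability distribution).
    probs = softmax(logits, temperature)
    # Step 2: roll it once; choices() draws one item according to the weights.
    return rng.choices(words, weights=probs, k=1)[0]


words = ["the", "blue", "falling", "a"]
logits = [-93.7, -94.3, -94.5, -94.8]

rng = random.Random(42)  # fixed seed only so this demo is repeatable
for t in (0.5, 2.0):
    counts = Counter(roll_the_dice(words, logits, t, rng) for _ in range(10_000))
    print(f"T={t}:", counts.most_common())
```

Over 10,000 rolls, “the” comes up roughly 62% of the time at T = 0.5 but only about a third of the time at T = 2.0 on this four-faced dice. Remove the fixed seed and each run produces different rolls, which is the same reason one prompt can yield different outputs.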

Think of it like this:

– Low temp = heavily weighted dice (predictable rolls)

– High temp = balanced dice (wild rolls)

That’s why:

✅ Same prompt ≠ same output

✅ There’s randomness in every response

✅ Temperature doesn’t make the model “smarter”—it changes how the dice is weighted

The dice is always there. Temperature just loads it differently.
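Why temperature can't make the model smarter: dividing by a positive temperature never changes which logit is larger, and $e^x$ only grows as $x$ grows, so the ranking of words is identical at every temperature (notation chosen here: $z_i$ is the raw logit of word $i$, $p_i$ its softmax probability, $T$ the temperature):

$$
T > 0:\quad z_i > z_j \iff \frac{z_i}{T} > \frac{z_j}{T} \iff p_i > p_j
$$

That is why “the” stays the most likely word at both $T = 0.5$ and $T = 2.0$; only the gaps between the faces of the dice shrink or grow, changing how often the lower-ranked words get rolled.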

In Part 3, we’ll shape and carve that dice even further.

🤔 What’s been your most surprising “dice roll” from an LLM?

♻️ Repost if this changed how you think about LLMs
